Calibrating Trust & Mitigating Bias in Clinical AI for Sub-Saharan Africa

- November 2, 2025


Executive Summary

The integration of Artificial Intelligence (AI) into healthcare holds transformative potential, particularly for resource-constrained health systems where it promises to augment diagnostic capabilities, optimize scarce resources, and personalize patient care. However, this promise is shadowed by a profound peril: the risk that AI systems, trained on globally unrepresentative data, will perpetuate and amplify existing health disparities. This report addresses the critical socio-technical challenges of implementing clinical AI in Sub-Saharan Africa (SSA), focusing on the dangerous interplay between algorithmic bias inherent in AI models and the cognitive biases of the clinicians who use them.

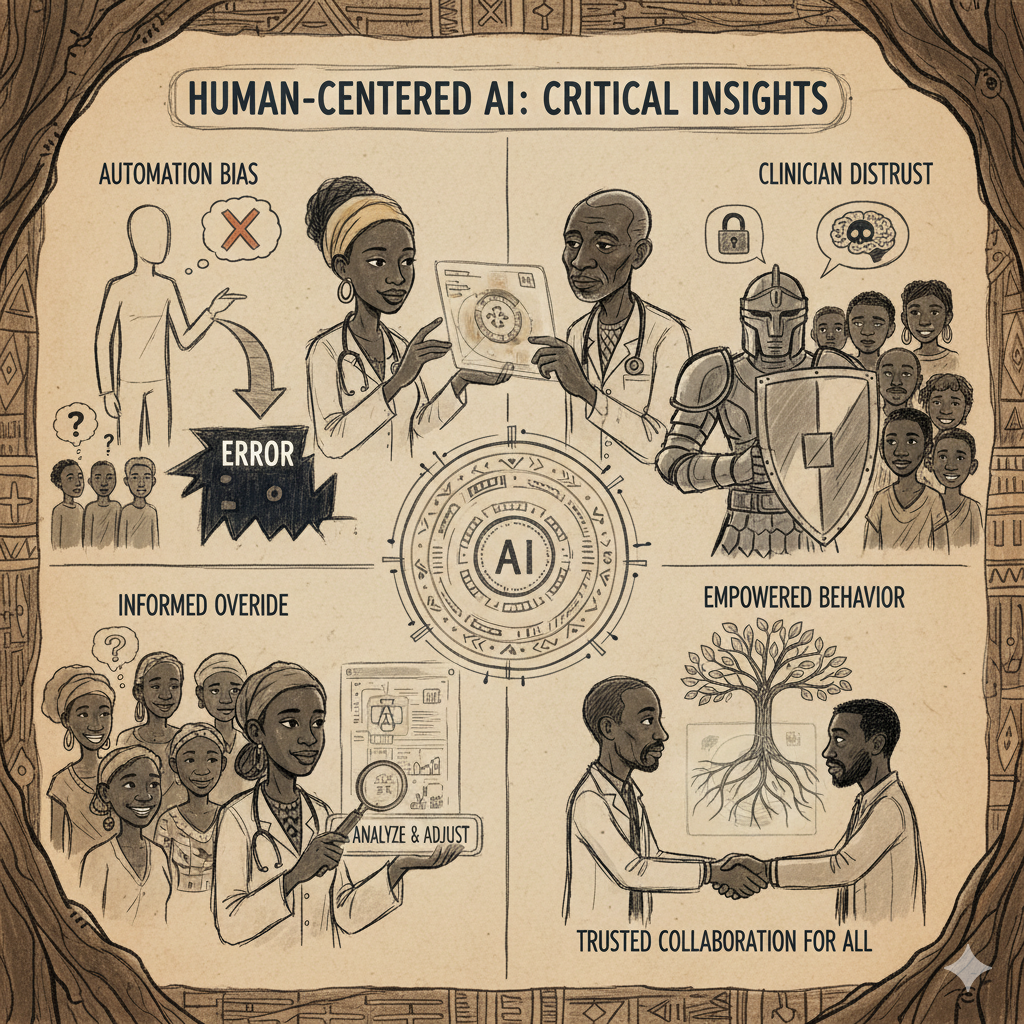

The central thesis of this analysis is that in the unique context of SSA—characterized by profound health inequities, infrastructural fragility, and a scarcity of local health data—the AI-clinician interface is not a peripheral feature but a central safety mechanism. Human factors, such as “automation bias” (the tendency to over-rely on automated recommendations) and justifiable clinician distrust, can either amplify the harm caused by biased algorithms or lead to the complete abandonment of potentially beneficial tools. Consequently, a failure to address the human-AI interaction can turn a tool intended to bridge equity gaps into a vector for deepening them.

This report deconstructs the mechanisms of this bias amplification and situates the problem within the specific socio-technical landscape of SSA’s healthcare ecosystem. It culminates in a proposal for a comprehensive, multi-phase research framework aimed at designing, developing, and validating a Human-Centered AI (HCAI) interface for clinical decision support. This proposed interface is architected to move beyond simple information delivery to actively foster critical analysis, calibrate clinician trust, and facilitate informed, safe overrides of AI recommendations. By embedding principles of explainability, transparency, and participatory design, this framework provides an actionable pathway toward developing clinical AI that is not only technologically sound but also ethically robust, contextually appropriate, and fundamentally aligned with the goal of advancing health equity for all, especially for the continent’s most underrepresented patient populations.

Introduction

Artificial intelligence is emerging as a transformative force in global health, with the potential to reshape healthcare delivery in unprecedented ways.1 By processing vast amounts of data far beyond human capacity, AI can accelerate medical discovery, enhance diagnostic accuracy, and facilitate the efficient allocation of precious healthcare resources.1 In regions facing significant healthcare challenges, these capabilities are not merely incremental improvements; they represent a potential paradigm shift, offering a pathway to bridge long-standing gaps in access and quality of care.3 However, this immense potential is accompanied by a significant and often underestimated risk. When designed and deployed without sufficient consideration for human values and contextual realities, AI can become a powerful vector for harm, inadvertently encoding, perpetuating, and even amplifying the very health disparities it is intended to reduce.5

This report argues that the successful and ethical implementation of clinical AI in Sub-Saharan Africa is contingent upon a socio-technical approach that directly confronts the dangerous interplay between algorithmic bias and human cognitive factors. The uncritical adoption of AI models, often trained on data that fails to represent the genetic and social diversity of African populations, introduces a latent risk of inequitable performance. This latent risk is actualized at the point of care, where human factors—such as the cognitive shortcut of automation bias or the corrosive effect of clinician distrust—mediate the final clinical decision. We posit that a meticulously designed Human-Centered AI (HCAI) interface is the most critical intervention point to break this cycle. Such an interface must move beyond the simple presentation of information to actively foster critical analysis, calibrate clinician trust to an appropriate level, and facilitate informed overrides. In doing so, the interface itself becomes a crucial safeguard for patient safety and health equity, particularly for underrepresented and vulnerable patient groups.

To build this argument, this report will proceed in four parts. Section 1 will establish the theoretical groundwork, defining the core principles of Human-Centered AI and exploring the complex psychology of automation and trust in clinical practice. Section 2 will connect these human factors to the technical realities of algorithmic bias, detailing the feedback loop through which human and algorithmic biases can synergistically amplify inequity. Section 3 will ground this analysis in the specific, complex realities of Sub-Saharan Africa, highlighting the unique socio-technical and infrastructural challenges that make this region a particularly high-risk environment for AI deployment. Finally, Section 4 will synthesize these findings into a constructive proposal: a detailed, multi-phase research and design framework for developing and testing a human-centered clinical AI interface tailored to the needs and context of healthcare in Sub-Saharan Africa.

The Socio-Technical Challenge of Trust in Clinical AI

The interaction between a clinician and an AI-powered Clinical Decision Support System (CDSS) is far more than a simple exchange of information. It is a complex socio-technical process governed by principles of design, human psychology, and the delicate calibration of trust. Understanding this dynamic is the first step toward building AI systems that are not only powerful but also safe and effective in real-world clinical practice. This section establishes the theoretical foundation by defining the philosophy of Human-Centered AI (HCAI), examining the pervasive cognitive challenge of automation bias, and exploring the spectrum of clinician trust that is essential for successful human-AI collaboration.

The Philosophy and Principles of Human-Centered AI (HCAI)

Human-Centered AI is a design philosophy and technical approach that seeks to align the development of artificial intelligence with human values and needs.2 It represents a departure from purely task-oriented or “automated” AI, which prioritizes efficiency and problem-solving and does not necessarily involve human oversight.10 Instead, HCAI places the needs, experiences, and well-being of individuals at the forefront of the entire AI lifecycle, from conception to deployment.9 The ultimate goal is to create AI systems that serve as collaborative tools to augment, amplify, and enhance human capabilities, rather than replacing human input and judgment.2

The core tenets of HCAI are rooted in a broader human-centered design tradition and emphasize several key principles. Foremost among these is the commitment to creating systems that are transparent, fair, and aligned with societal well-being.2 This requires adopting a holistic systems perspective that goes beyond the algorithm itself to consider the complex interactions between people, the tools and technology they use, the organizational environment, and the wider health system.11 The Association of American Medical Colleges (AAMC) operationalizes these ideas for medicine through a set of guiding principles, which include maintaining a human-centered focus that prioritizes the clinician-patient relationship, ensuring the ethical and transparent use of AI, and protecting patient data privacy.13

Practically, the HCAI framework provides a pathway for engineering systems that are Reliable, Safe, and Trustworthy (RST).16 Achieving this RST status is not an accident but the result of deliberate design choices and organizational commitments. It involves implementing specific technical practices, such as maintaining detailed audit trails to review system performance and analyze failures or near misses.16 It also requires fostering management strategies that cultivate a pervasive culture of safety, where reporting and learning from errors are encouraged.17 Finally, building trust necessitates structures of independent oversight that can verify claims and hold systems accountable.16 Professional bodies like the Institute of Electrical and Electronics Engineers (IEEE) are working to codify these principles into formal standards, emphasizing requirements for accountability in decision-making, transparency in system design and operation, and demonstrable competence of both the AI and its human users.18

The Psychology of Automation in Clinical Practice

While HCAI provides the guiding philosophy, its implementation must contend with the realities of human psychology. One of the most significant and well-documented challenges in human-automation interaction is automation bias (AB). This cognitive bias is defined as the human tendency to over-rely on or excessively favor suggestions from automated systems, often at the expense of one’s own judgment and even in the face of contradictory evidence from other sources.21 It functions as a mental shortcut, or heuristic, that leads users to reduce their own vigilant information seeking and processing, effectively outsourcing critical thinking to the machine.22

This phenomenon is not merely a theoretical concern; it has been shown to contribute to actual incidents and near misses in medicine and other safety-critical domains.21 In a clinical setting, automation bias can manifest in two primary forms of error:

- Errors of Commission: These occur when a clinician uncritically accepts and acts upon an incorrect recommendation from an AI system.24 A stark example would be a provider accepting a faulty AI-driven “malignant” classification for a benign finding and proceeding with an unnecessary and invasive procedure.21

- Errors of Omission: These happen when a clinician fails to recognize a problem or intervene in a timely manner because the automated system did not flag an issue.21 This could involve accepting an AI’s “non-urgent” triage assessment for a patient who subsequently suffers a preventable emergency.21

The propensity for a clinician to exhibit automation bias is not a fixed trait but is heavily influenced by a range of mediating factors. Research indicates that AB is particularly pronounced in tasks with high cognitive load and complexity, such as making a difficult diagnosis, where the effort required to manually verify the AI’s output is substantial.24 Environmental factors like time pressure, a ubiquitous stressor in many clinical settings, can further strain a clinician’s cognitive resources, making the heuristic of trusting the machine more appealing.25 A clinician’s level of experience also plays a role; while task-inexperienced users may be more susceptible to AB, even seasoned experts are not immune to its effects, particularly when fatigued or under pressure.22

The Spectrum of Clinician Trust: From Over-Reliance to Distrust

At the heart of the relationship between a clinician and an AI system lies the crucial element of trust. Trust acts as a critical mediator of behavior and is arguably the single strongest psychological factor driving over-reliance and automation bias.22 The central challenge in human-AI collaboration is therefore one of trust calibration.26 The goal is not to foster maximal, blind trust, but rather to cultivate an appropriate level of trust that is commensurate with the system’s proven capabilities and limitations. Miscalibrated trust can lead to two equally dangerous outcomes: misuse, in the form of over-reliance and automation bias, and disuse, where clinicians distrust and abandon a potentially valuable tool altogether.27

A significant body of evidence shows that both clinicians and patients harbor considerable distrust toward AI in healthcare, creating a major barrier to adoption.28 This distrust is not unfounded and stems from a combination of conceptual, technical, and humanistic challenges.

- Conceptual Challenges: A fundamental misunderstanding of AI’s capabilities can erode trust. AI systems, particularly those based on machine learning, do not “reason” in a human sense; they lack the “common sense” or “clinical intuition” that underpins expert medical judgment.31 When a system produces a recommendation that seems counterintuitive, this conceptual gap can lead to a perception of the AI as unreliable or illogical.

- Technical Challenges: The “black box” nature of many complex AI models is a primary source of distrust.23 When a system cannot explain the rationale behind its recommendations, it diminishes the clinician’s epistemic trust—their confidence in the knowledge underpinning the suggestion.31 This opacity forces the clinician into an uncomfortable binary choice of either accepting the advice on faith or rejecting it outright, precluding a more nuanced, collaborative decision-making process.

- Humanistic and Organizational Challenges: Trust is also shaped by the broader context of AI implementation. Widespread fears among healthcare workers about job displacement, a lack of adequate training and technical support from vendors post-deployment, and the experience of using products that were rushed to market without sufficient real-world testing all contribute to a climate of skepticism and distrust.28

Addressing this trust deficit requires a deliberate design strategy focused on cultivating appropriate reliance. This involves creating systems that are transparent about their own competence and limitations.26 Key design strategies include clearly setting user expectations during onboarding, displaying confidence levels or uncertainty estimates alongside outputs, and designing systems that can acknowledge and handle errors gracefully, for instance by providing clear paths for user feedback and correction.33
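As a rough illustration of displaying confidence alongside an output, the following minimal sketch formats a recommendation so that its uncertainty is always visible. The `Recommendation` class, the `render` function, the wording of the messages, and the `low` and `high` cut-offs are all hypothetical placeholders chosen for this example; they are not validated thresholds or part of any existing system.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    label: str         # e.g. "TB suspected" (illustrative label)
    confidence: float  # model's estimated probability, in [0, 1]


def render(rec, low=0.6, high=0.9):
    """Format a recommendation so its uncertainty is visible to the clinician.

    `low` and `high` are placeholder cut-offs, not clinically validated values.
    """
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    pct = round(rec.confidence * 100)
    if rec.confidence < low:
        band, advice = "low", "Treat as a prompt for independent assessment."
    elif rec.confidence < high:
        band, advice = "moderate", "Independent verification advised."
    else:
        band, advice = "high", "Review supporting evidence before acting."
    return f"{rec.label} (confidence {pct}%, {band}). {advice}"


print(render(Recommendation("TB suspected", 0.72)))
print(render(Recommendation("TB suspected", 0.40)))
```

Even the high-confidence message asks for a review of the evidence; that is a deliberate choice in this sketch, reflecting the point that the goal is appropriate reliance rather than maximal trust.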

The relationship between system accuracy and user trust presents a fundamental and dangerous paradox. Evidence suggests that high levels of system accuracy are a primary driver in building user trust.24 However, this very trust can breed complacency. As users become more confident in an AI’s reliability, they are simultaneously less likely to vigilantly monitor its performance and less likely to detect the rare but potentially catastrophic failures when they do occur.24 This creates a precarious situation where the better an AI system performs most of the time, the more devastating its occasional errors can be, precisely because the human overseer has been conditioned to lower their guard. This dynamic reveals that designing for safety is not simply about maximizing algorithmic accuracy. It also requires designing an interface that actively works to maintain clinician vigilance and critical engagement, even—and especially—in the face of a highly reliable system.

Furthermore, while automation bias is a cognitive tendency, its emergence and impact are profoundly shaped by the socio-technical environment. Factors such as high patient workloads, administrative pressures, and intense time constraints are not merely individual stressors; they are systemic features of many clinical environments.24 These external pressures increase the cognitive load on clinicians, making the mental shortcut of deferring to an automated system more attractive. Therefore, any effective strategy to mitigate automation bias cannot focus solely on training clinicians to be more vigilant. It must adopt a systems-level approach, consistent with HCAI principles, that uses the AI interface itself as a tool to manage cognitive load while simultaneously scaffolding and prompting the critical thinking necessary for safe decision-making.11

The Amplification of Inequity: How Human and Algorithmic Biases Interact

While human factors shape how clinicians interact with AI, the technical characteristics of the AI itself determine the quality and fairness of the information being presented. When an algorithm is biased, it introduces a latent risk of inequitable care. This section explores how that latent risk is translated into tangible harm through the mechanism of human-AI interaction. It details the anatomy of algorithmic bias, explains the vicious cycle through which human and algorithmic biases reinforce each other, and posits that human-computer interaction (HCI) is the most critical locus for intervention.

Anatomy of Algorithmic Bias in Healthcare

Algorithmic bias in healthcare is not a simple technical glitch. It is a systemic issue that occurs when an AI system’s application compounds existing societal inequities related to factors such as socioeconomic status, race, ethnicity, or gender, thereby amplifying disparities in health systems.5 This bias can be dissected into three interconnected sources: data-driven bias, bias introduced during algorithm development, and bias stemming from human interpretation and interaction.8 It is a cumulative problem that can arise and be compounded at every stage of the AI lifecycle.6

The lifecycle of bias typically unfolds as follows:

- Data Collection and Selection: This is the most common and insidious source of bias. AI models learn from data, and if that data reflects historical and societal biases, the model will inevitably learn and reproduce those same biases. This can happen in several ways: training data may be missing or incomplete for certain demographic groups; it may be systematically skewed (e.g., measurement bias); or it may fail to account for confounding variables like social determinants of health.8 The result is a model whose performance is inherently unequal, functioning suboptimally for underrepresented populations from the very start.

- Model Development and Evaluation: Bias can be introduced or exacerbated during the model development process itself. For instance, a development team that prioritizes maximizing overall model performance metrics (like accuracy) may inadvertently create a model that performs very well on the majority population but fails catastrophically on minority subgroups, a discrepancy that can be hidden by the aggregate performance score.6 Furthermore, the choice of a proxy variable to predict an outcome can encode deep-seated biases. A well-known example is an algorithm that used healthcare costs as a proxy for health needs, which falsely concluded that Black patients were healthier than equally sick White patients simply because less money had historically been spent on their care.8

- Deployment and Generalization: An AI model’s performance is not static. A model that performs well on the data it was trained and validated on may experience a significant performance degradation when deployed in a new clinical setting and applied to a different patient population. This phenomenon, known as distributional shift or data shift, is a major challenge.6 If the deployment population differs significantly from the training population—for example, in its demographic or socioeconomic makeup—the model can fail in unpredictable and inequitable ways.6
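The point about aggregate scores hiding subgroup failures can be made concrete with a small audit. The sketch below is illustrative only: the function name `accuracy_by_group`, the group labels, and the data are invented for this example, and the numbers are synthetic rather than drawn from any study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return (overall_accuracy, {group: accuracy}) for labelled predictions.

    Each record is a (group, y_true, y_pred) tuple.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(totals.values())
    per_group = {g: correct[g] / totals[g] for g in totals}
    return overall, per_group


# Synthetic data: 90 cases from a well-represented group, 10 from an
# under-represented group on which the hypothetical model does far worse.
records = (
    [("majority", 1, 1)] * 88 + [("majority", 1, 0)] * 2
    + [("minority", 1, 1)] * 5 + [("minority", 1, 0)] * 5
)

overall, per_group = accuracy_by_group(records)
print(f"overall: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

In this toy dataset the overall accuracy is 0.93, while accuracy for the smaller group is only 0.50. Reporting the per-group figures next to the aggregate is one simple way to surface the kind of discrepancy described above.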

The Vicious Cycle of Bias Amplification

The interaction between a biased algorithm and a human clinician prone to cognitive biases creates a dangerous feedback loop that can actively amplify health inequities. This mechanism represents the core socio-technical challenge of implementing AI in clinical practice.

The cycle of amplification works as follows: A biased algorithm—for instance, a dermatological AI trained predominantly on light-skinned individuals that is less accurate at detecting skin cancer in patients with darker skin 35—produces an erroneous recommendation (e.g., a false negative). A clinician, influenced by automation bias and perhaps facing time pressure, accepts this flawed recommendation without sufficient critical analysis or independent verification.21 The direct result is a substandard clinical decision—in this case, a missed diagnosis—that directly harms a patient from an already marginalized group, potentially leading to delayed treatment and worse health outcomes.6

This interaction does more than simply cause a single error; it can reinforce the flawed system. If the clinician’s uncritical acceptance of the AI’s output is logged by the system, it may be interpreted as a “successful” or “correct” human-AI interaction. This can mask the algorithm’s underlying failure and prevent the error from being flagged for model review and retraining. In this way, the human’s cognitive bias serves to validate the algorithm’s statistical bias, creating a pernicious feedback loop where each component reinforces the other’s flaws.6
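One way to avoid treating acceptance as evidence of correctness is to record the clinician's action and the later-confirmed outcome as separate fields. The following is a minimal sketch under that assumption; the `Interaction` record, its field names, and the two metric functions are hypothetical and do not describe any particular system's logging schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    ai_output: str
    clinician_action: str                    # "accepted" or "overridden"
    confirmed_outcome: Optional[str] = None  # ground truth, if later established


def acceptance_rate(log):
    """Share of AI outputs the clinician accepted; says nothing about correctness."""
    if not log:
        return None
    return sum(i.clinician_action == "accepted" for i in log) / len(log)


def verified_accuracy(log):
    """Accuracy over interactions with a confirmed outcome only.

    Returns None when nothing has been confirmed, rather than counting
    clinician acceptance as proof that the AI was right.
    """
    confirmed = [i for i in log if i.confirmed_outcome is not None]
    if not confirmed:
        return None
    hits = sum(i.ai_output == i.confirmed_outcome for i in confirmed)
    return hits / len(confirmed)


# Illustrative log: every output was accepted, but one confirmed case was wrong.
log = [
    Interaction("benign", "accepted", confirmed_outcome="benign"),
    Interaction("benign", "accepted", confirmed_outcome="malignant"),
    Interaction("benign", "accepted"),
    Interaction("benign", "accepted"),
]
print(acceptance_rate(log), verified_accuracy(log))
```

Here the acceptance rate is 1.0 while the verified accuracy is 0.5, which is exactly the gap that gets masked when acceptance is logged as success.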

Conversely, a different but equally problematic dynamic can emerge. If clinicians repeatedly encounter biased, irrelevant, or nonsensical outputs from an AI tool, it can lead to a well-founded and justified distrust, resulting in the complete abandonment of the system.28 This phenomenon of “disuse” means that even the potential benefits that the AI might offer for the majority population are lost. Furthermore, without user engagement and feedback, the underlying algorithmic biases are never identified or reported, leaving the system fundamentally broken and unimproved. This creates a “distrust spiral” where poor performance leads to disuse, which in turn prevents the feedback necessary to improve performance.

Human-Computer Interaction (HCI) as the Locus of Intervention

This analysis reveals that the point of interaction between the human and the AI—the user interface (UI)—is not a neutral conduit for information. The design of the UI is a powerful mediating factor that can either exacerbate the risk of bias amplification or serve as a crucial tool for its mitigation.45 A poorly designed interface can worsen automation bias by presenting AI-generated outputs as definitive, objective truths, with no indication of uncertainty or underlying evidence. Such a design encourages passive acceptance and discourages critical thought.

By contrast, an interface designed with the principles of HCI and human factors engineering can actively interrupt the vicious cycle of bias amplification. This involves moving beyond simple data presentation to create an environment that scaffolds critical thinking, provides transparent risk communication, and empowers clinicians to conduct a more thorough and critical assessment of AI recommendations.45 This reframes the problem. Instead of attempting to “fix the human” by admonishing them to be less biased, or trying to “fix the algorithm” in a technical vacuum, this approach focuses on “fixing the interaction” between them. The UI becomes the primary tool for calibrating trust and ensuring that the final clinical decision remains a product of informed human judgment, augmented—but not dictated—by the machine.

Algorithmic bias, once encoded into a model, represents a latent or potential risk for causing inequitable care. This latent risk is only translated into tangible clinical harm through the act of a decision. In the human-in-the-loop model, the clinician remains the final arbiter of that decision.21 If the clinician, through critical evaluation, identifies and overrides a biased AI recommendation, the potential harm is averted. However, if, due to the influence of automation bias, they uncritically accept the flawed recommendation, the harm is realized.22 Therefore, automation bias functions as the critical “last mile” in the causal chain that leads from biased training data to an inequitable patient outcome. This makes interventions aimed at mitigating automation bias at the point of care a matter of utmost importance for patient safety and health equity.43
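The "last mile" argument can be expressed as simple arithmetic: a wrong AI output only becomes a wrong clinical decision when the clinician accepts it. The sketch below multiplies an AI error rate by the probability that an erroneous output is accepted. It is a deliberately simplified model that ignores, for instance, cases where a clinician overrides a correct recommendation, and every number in it is invented for illustration rather than taken from any study.

```python
def realized_error_rate(ai_error_rate, p_accept_given_error):
    """Share of cases in which a wrong AI output becomes a wrong clinical decision."""
    return ai_error_rate * p_accept_given_error


# (AI error rate, probability the clinician accepts an erroneous output).
# All values are hypothetical.
scenarios = {
    "well-represented group, vigilant review": (0.05, 0.30),
    "under-represented group, vigilant review": (0.20, 0.30),
    "under-represented group, high automation bias": (0.20, 0.80),
}

for name, (err, accept) in scenarios.items():
    print(f"{name}: {realized_error_rate(err, accept):.3f}")
```

Under these assumed figures the realized error rate rises from 0.015 to 0.060 when only the algorithm is worse, and to 0.160 when poorer algorithmic performance coincides with stronger automation bias, which is why the two factors are best addressed together.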

The opacity of “black box” AI models presents a dual threat in this context. On one hand, their inscrutability makes it incredibly difficult for developers and users to identify, audit, and correct underlying algorithmic biases.23 On the other hand, this very lack of transparency actively undermines the clinician’s ability to overcome automation bias. When presented with a recommendation without a supporting rationale, a clinician is forced into a binary choice: either blindly trust the output (succumbing to automation bias) or reflexively reject it (leading to disuse). There is no pathway for a middle ground of informed, critical analysis and collaboration with the tool. This absence of an explanatory mechanism makes it nearly impossible for the user to achieve an appropriate calibration of trust. Consequently, explainability is not merely an ethical desideratum or a “nice-to-have” feature; it is a functional prerequisite for mitigating automation bias and enabling safe human-AI interaction in high-stakes environments like clinical medicine.

Contextualizing AI Implementation in Sub-Saharan Africa’s Healthcare Ecosystem

The theoretical challenges of bias and trust in clinical AI are universal, but their practical implications are profoundly shaped by the context in which the technology is deployed. Implementing AI in the healthcare ecosystems of Sub-Saharan Africa (SSA) presents a unique and complex set of challenges that differ significantly from those in high-income countries. A generic, one-size-fits-all approach to AI deployment is not only insufficient but also poses a grave risk of exacerbating the very inequities it aims to address. This section grounds the preceding analysis in the specific realities of SSA, examining the region’s health and technology landscape, the acute risk of “data colonialism,” and the compounding socio-technical barriers to trustworthy AI.

The Landscape of Health and Technology in SSA

The healthcare landscape in Sub-Saharan Africa is defined by a stark paradox of immense need and limited resources. The region shoulders a disproportionate 25% of the global disease burden while having access to only 3% of the world’s health workers.42 The long-standing burden of infectious diseases is now being compounded by a rising tide of non-communicable diseases, creating a complex dual burden on health systems that are often underfunded, understaffed, and inaccessible to large segments of the population.50 Within this landscape, profound health disparities are the norm, not the exception. Deeply entrenched socioeconomic factors create stark divides in health outcomes, with significant gaps between urban and rural populations and formidable barriers to care linked to poverty and educational attainment.54

It is precisely because of these immense challenges that AI holds such transformative potential for the region. A growing number of case studies demonstrate the applicability of AI in addressing key bottlenecks. AI-powered models are being successfully used for disease surveillance and outbreak prediction for illnesses like malaria and COVID-19; for enhancing diagnostics in areas with few specialists, such as analyzing chest X-rays for tuberculosis or images for cervical cancer; and for optimizing the logistics of medical supply chains, including the use of drones for delivering blood and vaccines to remote areas.4

However, the path to widespread, effective AI adoption is fraught with fundamental infrastructural and systemic hurdles. Many parts of the region suffer from poor and unreliable internet coverage, a lack of reliable electricity, and underdeveloped digital health foundations, such as interoperable Electronic Health Record (EHR) systems.52 These realities create a challenging environment for deploying sophisticated digital health technologies and necessitate context-specific solutions.

The “Data Colonialism” Risk and its Consequences

Perhaps the most critical challenge for developing equitable AI for SSA is the profound global imbalance in health data. A core tenet of machine learning is that an AI model is only as good as the data it is trained on, and systematic, well-structured, locally relevant data from the continent is scarce. It is estimated that 99% of the global health data used for training AI models originates from outside Africa, with only 1% coming from African countries themselves.63 This data gap is a direct consequence of the historical and ongoing underrepresentation of African populations in global clinical trials and medical research.64

This massive data imbalance leads to a high risk of a “performance cliff.” AI models that are developed and validated predominantly on data from non-African populations—which in practice often means populations of European ancestry—are likely to exhibit significantly poorer and less reliable performance when applied to the genetically and environmentally diverse populations of Africa.42 This is not a hypothetical concern. Studies have already demonstrated this effect in real-world applications; for example, an AI model for diabetic retinopathy screening, despite showing good results elsewhere, was found to have its overall effectiveness compromised by biases in the training data when deployed in Zambia.42

This dynamic creates a significant risk of what has been termed “data colonialism” or “digital colonialism.” The heavy dependence on externally developed AI technologies, which are trained on non-local data and often deployed without adequate adaptation to local clinical workflows or disease profiles, perpetuates a cycle of global inequality.49 It raises serious concerns about data sovereignty, the long-term sustainability of donor-driven projects, and the ethical implications of deploying potentially biased tools in vulnerable populations. This situation powerfully underscores the critical and urgent need for the development of robust, African-led innovation ecosystems and governance frameworks to ensure that AI serves the specific needs and contexts of the continent.49

Socio-Technical Barriers to Trustworthy AI: A Comparative Perspective

The confluence of challenges in Sub-Saharan Africa creates a unique risk profile for AI implementation that is distinct from that of high-income countries (HICs). The following table provides a comparative analysis of these socio-technical barriers, highlighting the nature and significance of the gap between these contexts.

| Challenge Area | High-Income Countries (HICs) | Sub-Saharan Africa (SSA) | Significance of the Gap |
| --- | --- | --- | --- |
| Data Availability & Quality | Large, digitized datasets exist but may lack diversity and contain historical biases. Interoperability of legacy EHR systems is a key challenge.[53] | Paucity of locally generated, structured digital health data. Heavy reliance on paper records. Datasets are often incomplete, fragmented, and not representative of genetic and ethnic diversity.[42, 63, 71] | The fundamental building blocks for training locally relevant AI are missing, forcing reliance on biased external models and exacerbating the risk of performance failure. |
| Digital & Physical Infrastructure | Robust internet connectivity and stable electricity are widespread. Focus is on upgrading legacy IT systems. | Inconsistent electricity supply and low internet penetration (only 28% in SSA) are major barriers, especially in rural areas.[52, 63, 71, 72] | Cloud-based AI solutions, common in HICs, are often not viable. This necessitates development of offline or low-bandwidth capable systems (e.g., edge computing).[72, 73] |
| Workforce Capacity & AI Literacy | Shortage of AI specialists exists, but general digital literacy among clinicians is high. Focus is on AI-specific training. | A dual shortage of both healthcare professionals (“brain drain” of 20,000/year) and technology specialists. Limited digital literacy and technical capacity among users is a significant hurdle.[52, 60, 63, 72] | Implementation is hampered by a lack of personnel to develop, manage, and correctly use AI tools. “Task-shifting” to less-specialized workers using AI is promising but risky without proper oversight.[49] |
| Financial Investment & Sustainability | High levels of public and private investment (e.g., >$11 billion globally in 2021, led by US/UK). Focus is on ROI and market competition.[63] | Heavy reliance on short-term, external donor funding. Limited national investment creates sustainability challenges and dependence on external partners.[52, 63, 69, 74] | Projects often fail after initial funding ends. Lack of local ownership and investment hinders long-term integration and maintenance of AI systems. |
| Governance & Regulation | Mature regulatory bodies (e.g., FDA, EMA) are actively developing AI-specific guidelines. Robust data privacy laws (e.g., GDPR, HIPAA) exist.[19, 75] | Governance frameworks are often nascent or absent. There’s a critical gap in health-specific AI governance and a lack of robust data protection legislation in many countries.[42, 49, 70, 76] | The absence of safeguards allows for AI implementation without necessary ethical and legal oversight, increasing risks of bias, data misuse, and lack of accountability.[42] |

The challenges facing SSA are not merely additive; their interplay has a multiplicative effect, creating a uniquely high-risk environment for AI deployment. A clinician in a well-resourced HIC setting may have the time, specialist support, and access to supplementary data to critically question a dubious AI recommendation. In stark contrast, a clinical officer or nurse in a rural SSA clinic often faces a much higher patient load, has limited access to specialist backup, and may be working with intermittent power or internet connectivity.[52] This high-stress, resource-constrained environment makes that clinician far more susceptible to the cognitive pressures that lead to automation bias.[24] This heightened human vulnerability is then combined with an AI tool that is itself more likely to be algorithmically biased because it was trained on non-representative data.[42] The risks thus compound at every level of the socio-technical system, from the data to the algorithm to the infrastructure to the end-user.

The lack of entrenched legacy IT systems in SSA is sometimes framed as a “leapfrogging” opportunity that allows the region to bypass older technologies and adopt modern, interoperable standards from the outset.[53] This narrative is a double-edged sword. The absence of legacy systems also signifies an absence of the foundational digital health infrastructure, data standards, and regulatory capacity that are essential for the safe and effective deployment of any advanced technology.[53] Without these fundamentals in place, the “leapfrogging” impulse can lead to the rapid, uncoordinated, and unregulated deployment of fragmented and unsustainable AI tools, creating a new generation of technological and ethical problems before the institutional capacity to manage them is established. Foundational governance and capacity-building therefore need to precede or, at the very least, accompany technological adoption, so that progress is both sustainable and equitable.[70]

A Research Framework for a Human-Centered Clinical AI Interface

Moving from a diagnosis of the problem to a constructive solution requires a deliberate and context-aware approach to system design and evaluation. The preceding analysis demonstrates that the AI-clinician interface is the critical juncture where algorithmic and human biases converge. Therefore, designing this interface to promote critical thinking and appropriate trust is the most potent intervention for ensuring the safe and equitable use of clinical AI in Sub-Saharan Africa. This section proposes a comprehensive research framework to develop and validate such an interface, outlining its core design tenets, key features, and a rigorous, participatory methodology tailored to the SSA context.

Core Design Tenets for Promoting Critical Analysis

The design of the proposed AI interface will be guided by a set of core principles derived from Human-Centered AI and Human-Computer Interaction research. These tenets shift the focus from merely presenting an algorithmic output to facilitating a robust and well-reasoned clinical decision.

- From Accuracy to Actionability: The primary objective of the interface is not simply to deliver a prediction but to empower the clinician to make a high-quality, patient-centered decision. This requires a design that prioritizes user-centricity, transparency in how the AI works, and the actionability of the information provided.[79] The clinician must be able to understand why the AI is making a recommendation and what they can do with that information.

- Human-in-Control Philosophy: The interface must be explicitly designed to augment and empower clinicians, reinforcing their role as the ultimate decision-making authority.[2] The AI is a consultant, not a commander. This philosophy aligns with emerging regulatory principles, such as those in the proposed “Right to Override Act,” which would require that AI outputs not substitute for the independent, professional judgment of a healthcare professional.[80]

- Designing for Appropriate Reliance: The interface’s goal is not to maximize user trust but to calibrate it appropriately. This is achieved by designing a system that is honest and transparent about its own capabilities, limitations, and degree of uncertainty in any given case.[26] By clearly communicating when it is confident and when it is not, the system gives the clinician the cues needed to adjust their level of scrutiny and reliance accordingly.

Key Interface Features for Explainability and Informed Overrides

To translate these design tenets into a functional tool, the interface will incorporate specific, evidence-based features designed to enhance explainability and streamline the process of overriding AI recommendations.

User-Centered Explainable AI (XAI)

The system must move beyond providing opaque, “black box” outputs. It will integrate XAI techniques designed not for AI experts, but for busy clinicians at the point of care. This means shifting from purely epistemic explanations (which describe the model’s internal mathematics) to pragmatic explanations that are directly relevant to the clinical context and the user’s comprehension.[79] The goal is to translate the model’s statistical reasoning into clinically meaningful and actionable insights.[81] Key XAI features will include:

- Confidence Scores: For every major prediction or recommendation, the interface will display a quantitative confidence score (e.g., “85% confidence in this diagnosis”) or a qualitative level (e.g., “High Confidence”). This immediately signals to the user the model’s own assessment of its uncertainty, prompting greater scrutiny for low-confidence outputs.[33]

- Feature Attribution: The interface will visually highlight the key patient data points (such as specific lab values, demographic factors, or symptoms from the clinical notes) that most heavily influenced the AI’s recommendation.[81] This allows the clinician to quickly assess whether the AI is focusing on clinically relevant factors or being swayed by spurious correlations.

- Counterfactual Explanations: Where feasible, the system will provide “what-if” style explanations. For example, alongside a high-risk score, it might state, “The risk score would be in the medium range if the patient’s systolic blood pressure were below 140 mmHg.” This makes the model’s logic more intuitive and provides actionable information for patient management.[37]

- Progressive Disclosure: To avoid overwhelming the user with information and increasing cognitive load, explanations will be layered. The interface will initially present a high-level summary of its findings. The user will then have clear, intuitive controls to “drill down” into more detailed explanations, such as feature attributions or counterfactuals, only if they require them.[84]
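
The four features above could share a single explanation payload that the interface renders at increasing levels of detail. The following is a minimal sketch under stated assumptions, not a specification of the proposed interface: the class names, field names, confidence thresholds, and attribution weights are all hypothetical, and a real system would obtain attributions and counterfactuals from a validated XAI method.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Attribution:
    feature: str   # hypothetical feature name, e.g. "systolic_bp"
    value: float   # the patient's recorded value
    weight: float  # signed contribution to the prediction (illustrative units)


@dataclass
class Explanation:
    prediction: str
    confidence: float  # model-reported probability in [0, 1]
    attributions: List[Attribution] = field(default_factory=list)
    counterfactual: Optional[str] = None


def confidence_label(confidence: float) -> str:
    """Map a numeric confidence to a qualitative level (thresholds are assumptions)."""
    if confidence >= 0.85:
        return "High Confidence"
    if confidence >= 0.60:
        return "Moderate Confidence"
    return "Low Confidence"


def render(explanation: Explanation, level: int = 0, top_k: int = 3) -> List[str]:
    """Return display lines; higher levels progressively disclose more detail.

    level 0: summary with confidence score
    level 1: adds the top_k most influential features
    level 2: adds the counterfactual statement, if one is available
    """
    lines = [
        f"{explanation.prediction} "
        f"({explanation.confidence:.0%}, {confidence_label(explanation.confidence)})"
    ]
    if level >= 1:
        ranked = sorted(explanation.attributions,
                        key=lambda a: abs(a.weight), reverse=True)
        for a in ranked[:top_k]:
            direction = "raises" if a.weight > 0 else "lowers"
            lines.append(f"  {a.feature} = {a.value:g} {direction} the risk estimate")
    if level >= 2 and explanation.counterfactual:
        lines.append(f"  What if: {explanation.counterfactual}")
    return lines


example = Explanation(
    prediction="Elevated cardiovascular risk",
    confidence=0.85,
    attributions=[
        Attribution("systolic_bp", 152, 0.4),
        Attribution("age", 61, 0.1),
        Attribution("on_treatment", 1, -0.2),
    ],
    counterfactual="Risk would be medium if systolic blood pressure were below 140 mmHg.",
)

for line in render(example, level=2):
    print(line)
```

Defaulting to `level=0` keeps the first screen to a single line, and the clinician opts in to the attribution and counterfactual layers, which is one way to respect the cognitive-load concern raised above.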

The Informed Override and Feedback Mechanism

A central feature of the human-in-control philosophy is a robust and seamless override mechanism. Overriding an AI recommendation should not be treated as an error or an exception but as a core, expected part of the clinical workflow.

- Low-Friction Override: The UI will feature a clear and easily accessible “override” or “disagree” button associated with every major AI recommendation. The act of overriding will be designed to be as simple and quick as possible to minimize workflow disruption.[80]

- Structured Override Documentation: Crucially, the act of overriding will trigger a simple, structured documentation process. Instead of a free-text box, the clinician will be prompted to select one or more reasons for the override from a pre-defined, context-sensitive list (e.g., “Recommendation contradicts physical exam findings,” “Concern about underlying data quality,” “Patient context/preferences not captured by model”). This simple step transforms a subjective decision into a valuable, structured data point for system evaluation.

- Closed-Loop Feedback Integration: This structured override data is the linchpin of a continuous learning system. The data must be aggregated, anonymized, and regularly fed back to a multidisciplinary AI governance committee and the technical development team.[80] Patterns in overrides (e.g., a specific recommendation being frequently overridden for a particular patient demographic) are a strong signal for identifying hidden algorithmic biases, highlighting workflow integration problems, and guiding future model retraining and UI improvements. To encourage honest and frequent use of this feature, policies ensuring the anonymity of the reporting clinician and providing whistleblower protections are essential.[80]
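
To make the override loop concrete, the sketch below shows one possible shape for a structured override record and a simple aggregation that a governance committee might review. It is illustrative only: the record fields, the `patient_group` bucket, and the `min_cases` and `rate_gap` thresholds are assumptions rather than part of the proposed framework, and the record deliberately stores no clinician identifier, in line with the anonymity requirement above.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Tuple


class OverrideReason(Enum):
    # Reasons taken from the examples listed in the text above.
    CONTRADICTS_EXAM = "Recommendation contradicts physical exam findings"
    DATA_QUALITY = "Concern about underlying data quality"
    CONTEXT_NOT_CAPTURED = "Patient context/preferences not captured by model"


@dataclass(frozen=True)
class Encounter:
    recommendation_id: str  # hypothetical identifier for a recommendation type
    patient_group: str      # coarse demographic bucket (assumption)
    overridden: bool
    reasons: Tuple[OverrideReason, ...] = ()


def override_rates(encounters: List[Encounter]) -> Dict[Tuple[str, str], float]:
    """Override rate per (recommendation, patient group)."""
    totals: Counter = Counter()
    overrides: Counter = Counter()
    for e in encounters:
        key = (e.recommendation_id, e.patient_group)
        totals[key] += 1
        overrides[key] += int(e.overridden)
    return {key: overrides[key] / totals[key] for key in totals}


def reason_counts(encounters: List[Encounter]) -> Counter:
    """Tally the documented reasons across all overridden recommendations."""
    return Counter(r for e in encounters if e.overridden for r in e.reasons)


def flag_for_review(encounters: List[Encounter], min_cases: int = 20,
                    rate_gap: float = 0.2) -> List[Tuple[str, str, float, float]]:
    """Flag groups overridden much more often than the recommendation's overall rate."""
    group_n = Counter((e.recommendation_id, e.patient_group) for e in encounters)
    overall_n = Counter(e.recommendation_id for e in encounters)
    overall_k = Counter(e.recommendation_id for e in encounters if e.overridden)
    flagged = []
    for (rec, group), rate in override_rates(encounters).items():
        overall = overall_k[rec] / overall_n[rec]
        if group_n[(rec, group)] >= min_cases and rate - overall >= rate_gap:
            flagged.append((rec, group, rate, overall))
    return flagged
```

A flag from `flag_for_review` is a prompt for human investigation, not evidence of bias on its own; small groups are excluded through `min_cases` because their rates are too noisy to interpret.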

A Participatory Research Methodology for the SSA Context

The development and evaluation of this interface cannot happen in a laboratory, detached from the realities of clinical practice in SSA. A phased, mixed-methods research methodology, grounded in the principles of participatory design and tailored for low-resource settings, is required. The following framework outlines a comprehensive approach, from initial needs assessment to real-world implementation.

| Research Phase | Key Activities | Participants | HCAI Principles Applied | Key Evaluation Metrics |
| --- | --- | --- | --- | --- |
| Phase 1: Contextual Inquiry & Co-Design | Semi-structured interviews; ethnographic workflow observation; “Tripartite Partnership” workshops involving designers, providers, and community members [85]; development of user personas and journey maps to model clinical workflows.[86, 87] | Clinicians (nurses, clinical officers, physicians), health administrators, IT staff, community representatives/patient advocates from a target region in SSA. | Co-design [11], Stakeholder Engagement [36, 88], Understanding User Needs [10] | Qualitative themes on clinical workflows, decision-making pain points, perceptions of trust and technology, and specific information needs. Definition of context-specific use cases and user requirements. |
| Phase 2: Iterative Prototyping & Usability Testing | Development of low-fidelity (paper) and high-fidelity (interactive) prototypes of the interface. Heuristic evaluation and cognitive walkthroughs with target users. Think-aloud protocols during task completion to assess comprehension and utility of XAI features.[86, 89] | Clinicians from the target user group (e.g., nurses, clinical officers). HCI/UX experts. | Iterative Design [12, 79], Usability & Accessibility [32], Transparency [82, 90] | System Usability Scale (SUS) scores; task completion rates and times; error rates; qualitative feedback on the clarity, trustworthiness, and utility of explanations; identification of UI-induced confusion or workflow friction. |
| Phase 3: Pilot Study in a Simulated Clinical Environment | A laboratory-based randomized controlled trial where clinicians diagnose a set of curated clinical vignettes. Vignettes will include cases where the AI is correct, incorrect, and biased (i.e., systematically incorrect for specific demographics). Participants will be randomized to use the AI-CDSS or a control interface. | A larger cohort of clinicians, stratified by profession (nurse, clinical officer) and experience level. | Human Agency & Oversight [16, 82], Accountability [20, 90], Safety [91] | Primary: Rate of “negative consultations” (uncritical acceptance of incorrect AI advice), a direct measure of automation bias.[22, 25] Secondary: Overall diagnostic accuracy; time to decision; frequency and appropriateness of overrides; subjective trust scores (pre/post-task); measures of verification intensity (e.g., number of clicks, time spent reviewing data).[92] |
| Phase 4: Real-World Feasibility & Implementation Study | A controlled, phased rollout of the validated interface in a live clinical setting (e.g., a single primary care clinic or hospital department). Mixed-methods monitoring of system usage, clinical outcomes, and feedback channels over a 6- to 12-month period. | Clinicians, patients, and administrators at the designated pilot site(s). | Monitoring & Evaluation [13, 15], Fairness [82, 90], Continuous Improvement [37] | System adoption and usage rates; frequency of overrides and analysis of documented reasons; qualitative feedback from the local AI governance committee [80]; pre/post analysis of key clinical quality and safety metrics; ongoing evaluation of fairness metrics (e.g., model performance and clinician agreement rates across demographic subgroups).[93] |
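
The Phase 3 primary outcome can be computed directly from vignette responses. The sketch below is one possible operationalization, following the definition given in the table (acceptance of incorrect AI advice); the record fields and vignette-type labels are hypothetical, and a study protocol might instead define the measure relative to the clinician's pre-advice answer.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VignetteResponse:
    vignette_type: str   # assumed labels: "ai_correct", "ai_incorrect", "ai_biased"
    ai_advice: str
    final_answer: str    # the clinician's final diagnosis
    correct_answer: str


def negative_consultation_rate(responses: List[VignetteResponse],
                               vignette_type: Optional[str] = None) -> Optional[float]:
    """Share of incorrect-advice cases in which the clinician adopted the AI's answer.

    Returns None when there are no eligible cases, to avoid dividing by zero.
    """
    eligible = [
        r for r in responses
        if r.ai_advice != r.correct_answer
        and (vignette_type is None or r.vignette_type == vignette_type)
    ]
    if not eligible:
        return None
    accepted = sum(r.final_answer == r.ai_advice for r in eligible)
    return accepted / len(eligible)


def diagnostic_accuracy(responses: List[VignetteResponse]) -> Optional[float]:
    """Share of all vignettes in which the final answer was correct."""
    if not responses:
        return None
    return sum(r.final_answer == r.correct_answer for r in responses) / len(responses)


# Toy responses with placeholder diagnosis labels, for illustration only.
responses = [
    VignetteResponse("ai_correct", "dx_a", "dx_a", "dx_a"),
    VignetteResponse("ai_incorrect", "dx_a", "dx_a", "dx_b"),  # accepted wrong advice
    VignetteResponse("ai_incorrect", "dx_a", "dx_b", "dx_b"),  # overrode appropriately
    VignetteResponse("ai_biased", "dx_c", "dx_c", "dx_d"),
    VignetteResponse("ai_biased", "dx_c", "dx_c", "dx_d"),
]

print(negative_consultation_rate(responses))
print(negative_consultation_rate(responses, "ai_biased"))
print(diagnostic_accuracy(responses))
```

Reporting the rate separately for the incorrect and biased vignette types, and for each study arm and clinician stratum, would show whether the interface reduces uncritical acceptance where it matters most.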

Conclusion

The deployment of artificial intelligence in Sub-Saharan Africa’s healthcare systems represents a critical inflection point. Undertaken with care, foresight, and a deep commitment to equity, AI can be a powerful tool to augment overburdened health workers and narrow persistent gaps in care. However, if pursued as a purely technical endeavor—divorced from the social, cultural, and infrastructural realities of the continent—it risks becoming an engine of inequity, amplifying existing biases and harming the very populations it is meant to serve. This report has argued that the AI-clinician interface is the critical site where these risks converge and must be confronted. The latent dangers of biased algorithms and the active dangers of human cognitive biases meet at the screen, and it is through the careful design of this interaction that safety can be engineered.

The proposed research framework offers a tangible pathway to translate the high-level principles of Human-Centered and Trustworthy AI into a practical, context-aware clinical tool. It moves beyond abstract ethical guidelines to a concrete, participatory methodology for building and validating an interface that actively promotes critical thinking, calibrates trust, and empowers clinicians. This is not merely a technical project but a fundamentally socio-technical one, requiring deep and sustained engagement with clinicians, patients, and communities from the outset.[85] By making the override mechanism a core feature and creating a closed-loop feedback system, this approach transforms every clinical interaction into an opportunity for learning and system improvement, ensuring the AI evolves in response to real-world needs and failures.

Ultimately, the long-term solution to ensuring equitable AI for health in Africa lies in building local capacity and fostering robust, African-led ecosystems of innovation and governance.[49] This involves investing in local data science talent, developing representative local datasets, and establishing national and regional governance frameworks that reflect the continent’s unique priorities. The framework proposed here is a step in that direction: a model for co-designing technology that is shaped by and for the communities it is intended to serve. By prioritizing the human at the center of the system, we can work to ensure that AI fulfills its promise as a transformative force for strengthening health systems and advancing global health equity.

Works cited

- Artificial Intelligence in healthcare – Public Health – European Commission, accessed November 2, 2025, https://health.ec.europa.eu/ehealth-digital-health-and-care/artificial-intelligence-healthcare_en

- Human-Centered AI: What Is Human-Centric Artificial Intelligence? | Lindenwood, accessed November 2, 2025, https://online.lindenwood.edu/blog/human-centered-ai-what-is-human-centric-artificial-intelligence/

- AI-Driven Healthcare: A Survey on Ensuring Fairness and Mitigating Bias – arXiv, accessed November 2, 2025, https://arxiv.org/html/2407.19655v1

- The Role of Artificial Intelligence in Strengthening Healthcare Delivery in Sub-Saharan Africa: Challenges and Opportunities – ResearchGate, accessed November 2, 2025, https://www.researchgate.net/publication/388224732_The_Role_of_Artificial_Intelligence_in_Strengthening_Healthcare_Delivery_in_Sub-Saharan_Africa_Challenges_and_Opportunities

- Healthcare Bias in AI: A Systematic Literature Review – SciTePress, accessed November 2, 2025, https://www.scitepress.org/Papers/2025/134803/134803.pdf

- Bias in medical AI: Implications for clinical decision-making – PMC, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11542778/

- Algorithmic Bias in Health Care Exacerbates Social Inequities—How to Prevent It, accessed November 2, 2025, https://hsph.harvard.edu/exec-ed/news/algorithmic-bias-in-health-care-exacerbates-social-inequities-how-to-prevent-it/

- Addressing bias in big data and AI for health care: A call for open …, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC8515002/

- Human-Centered Design to Address Biases in Artificial Intelligence …, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC10132017/#:~:text=Benefits%20of%20HCAI%20in%20Health,of%20individuals%20at%20the%20forefront.

- Human Centered AI – Definition, Benefits, Cons, Applications – LXT | AI, accessed November 2, 2025, https://www.lxt.ai/ai-glossary/human-centered-ai/

- Integrating Human-Centred AI in Clinical Practice – CIEHF, accessed November 2, 2025, https://ergonomics.org.uk/static/f77fceb4-7c26-4eef-98557d15c455422e/91e48e84-cdc2-4db6-827bea5f885a07ad/Integrating-Human-Centred-AI-in-Clinical-Practice.pdf

- Framing the Human-Centered Artificial Intelligence Concepts and Methods: Scoping Review, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12136509/

- Maintain Human-Centered Focus | AAMC, accessed November 2, 2025, https://www.aamc.org/about-us/mission-areas/medical-education/principles-ai-use/maintain-human-centered-focus

- Appendix for Principles for the Responsible Use of Artificial Intelligence in and for Medical Education | AAMC, accessed November 2, 2025, https://www.aamc.org/about-us/mission-areas/medical-education/principles-ai-use/appendix

- Writing Center Voices | Navigating the Future: AAMC’s Principles for Responsible AI Use in Medical Education | the PULSE | NEOMED, accessed November 2, 2025, https://thepulse.neomed.edu/articles/writing-center-voices-navigating-the-future-aamcs-principles-for-responsible-ai-use-in-medical-education/

- Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy – arXiv, accessed November 2, 2025, https://arxiv.org/pdf/2002.04087

- Human-Centred AI – Research Features, accessed November 2, 2025, https://researchfeatures.com/wp-content/uploads/2021/04/Ben-Shneiderman.pdf

- IEEE Ethics for AI System Design Training, accessed November 2, 2025, https://standards.ieee.org/about/training/ethics-for-ai-system-design/

- Autonomous and Intelligent Systems (AIS) Standards – IEEE SA, accessed November 2, 2025, https://standards.ieee.org/initiatives/autonomous-intelligence-systems/standards/

- Four Conditions for Building Trusted AI Systems – IEEE Standards Association, accessed November 2, 2025, https://standards.ieee.org/beyond-standards/four-conditions-for-building-trusted-ai-systems/

- Automation Bias & Clinical Judgment: What’s a ‘Reasonable …, accessed November 2, 2025, https://www.western-summit.com/blogs/automation-bias

- Automation bias: a systematic review of frequency, effect mediators …, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC3240751/

- Automation bias – Knowledge and References – Taylor & Francis, accessed November 2, 2025, https://taylorandfrancis.com/knowledge/Medicine_and_healthcare/Psychiatry/Automation_bias/

- Automation bias and verification complexity: a systematic review – Oxford Academic, accessed November 2, 2025, https://academic.oup.com/jamia/article/24/2/423/2631492

- Automation Bias in AI-Assisted Medical Decision-Making under Time Pressure in Computational Pathology – arXiv, accessed November 2, 2025, https://arxiv.org/html/2411.00998v1

- Finding Consensus on Trust in AI in Health Care: Recommendations From a Panel of International Experts – Journal of Medical Internet Research, accessed November 2, 2025, https://www.jmir.org/2025/1/e56306/

- It’s not just a last mile problem. – Making machine learning work in the real world of patient care – Stanford Medicine, accessed November 2, 2025, https://med.stanford.edu/content/dam/sm/healthcare-ai/images/AMIA-2020-Panel-Presentation.pdf

- Trust in Healthcare AI Can Be Hurt Intentionally or Innocuously …, accessed November 2, 2025, https://www.psychologytoday.com/us/blog/patient-trust-matters/202509/trust-in-healthcare-ai-can-be-hurt-intentionally-or-innocuously

- Most people do not trust healthcare systems to use artificial intelligence (AI) responsibly, accessed November 2, 2025, https://www.sph.umn.edu/news/most-people-do-not-trust-healthcare-systems-to-use-artificial-intelligence-ai-responsibly/

- Two-thirds of patients distrust AI in health care | Medical Economics, accessed November 2, 2025, https://www.medicaleconomics.com/view/two-thirds-of-patients-distrust-ai-in-health-care

- Trust and medical AI: the challenges we face and the expertise … – NIH, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC7973477/

- (PDF) Human-AI Interaction Design Standards – ResearchGate, accessed November 2, 2025, https://www.researchgate.net/publication/390115046_Human-AI_Interaction_Design_Standards

- The Psychology Of Trust In AI: A Guide To Measuring And Designing …, accessed November 2, 2025, https://www.smashingmagazine.com/2025/09/psychology-trust-ai-guide-measuring-designing-user-confidence/

- Building trust in healthcare AI: five key insights from the 2025 Future Health Index – Philips, accessed November 2, 2025, https://www.philips.com/a-w/about/news/archive/blogs/innovation-matters/2025/building-trust-in-healthcare-ai-five-key-insights-from-the-2025-future-health-index.html

- Overcoming AI Bias: Understanding, Identifying and Mitigating …, accessed November 2, 2025, https://www.accuray.com/blog/overcoming-ai-bias-understanding-identifying-and-mitigating-algorithmic-bias-in-healthcare/

- Human-Centered Design to Address Biases in Artificial Intelligence …, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC10132017/

- Human-Centered Design to Address Biases in Artificial Intelligence, accessed November 2, 2025, https://www.jmir.org/2023/1/e43251/

- Artificial Intelligence Bias in Health Care: Web-Based Survey, accessed November 2, 2025, https://www.jmir.org/2023/1/e41089/

- AI Algorithms Used in Healthcare Can Perpetuate Bias | Rutgers University-Newark, accessed November 2, 2025, https://www.newark.rutgers.edu/news/ai-algorithms-used-healthcare-can-perpetuate-bias

- AI algorithmic bias in healthcare decision making – Paubox, accessed November 2, 2025, https://www.paubox.com/blog/ai-algorithmic-bias-in-healthcare-decision-making

- Confronting the Mirror: Reflecting on Our Biases Through AI in Health Care, accessed November 2, 2025, https://learn.hms.harvard.edu/insights/all-insights/confronting-mirror-reflecting-our-biases-through-ai-health-care

- Artificial intelligence in global health: An unfair future for health in Sub-Saharan Africa? | Health Affairs Scholar | Oxford Academic, accessed November 2, 2025, https://academic.oup.com/healthaffairsscholar/article/3/2/qxaf023/8002318

- The automation of bias in medical Artificial Intelligence (AI): Decoding the past to create a better future | Request PDF – ResearchGate, accessed November 2, 2025, https://www.researchgate.net/publication/344692802_The_automation_of_bias_in_medical_Artificial_Intelligence_AI_Decoding_the_past_to_create_a_better_future

- Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies – MDPI, accessed November 2, 2025, https://www.mdpi.com/2413-4155/6/1/3

- Using human factors methods to mitigate bias in artificial intelligence-based clinical decision support – PubMed, accessed November 2, 2025, https://pubmed.ncbi.nlm.nih.gov/39569464

- Using human factors methods to mitigate bias in artificial intelligence-based clinical decision support. | PSNet, accessed November 2, 2025, https://psnet.ahrq.gov/issue/using-human-factors-methods-mitigate-bias-artificial-intelligence-based-clinical-decision

- Joint Commission and Coalition for Health AI Release First-of-Its-Kind Guidance on Responsible AI Use in Healthcare | Polsinelli – JD Supra, accessed November 2, 2025, https://www.jdsupra.com/legalnews/joint-commission-and-coalition-for-4447578/

- Eliminating Racial Bias in Health Care AI: Expert Panel Offers Guidelines, accessed November 2, 2025, https://medicine.yale.edu/news-article/eliminating-racial-bias-in-health-care-ai-expert-panel-offers-guidelines/

- Artificial Intelligence in African Healthcare: Catalyzing Innovation …, accessed November 2, 2025, https://www.preprints.org/manuscript/202506.1824

- Emerging Lessons on AI-Enabled Health Care – ACET, accessed November 2, 2025, https://acetforafrica.org/research-and-analysis/insights-ideas/commentary/emerging-lessons-on-ai-enabled-health-care/

- Artificial Intelligence in Sub-Saharan Africa – Healthcare Report, accessed November 2, 2025, https://aiinafricaresearch.alueducation.com/reports/healthcare/

- (PDF) Overview of Digital Health in Sub-Saharan Africa: Challenges …, accessed November 2, 2025, https://www.researchgate.net/publication/368448907_Overview_of_Digital_Health_in_Sub-Saharan_Africa_Challenges_and_Recommendations

- Digital Health Systems in Africa – IQVIA, accessed November 2, 2025, https://www.iqvia.com/-/media/iqvia/pdfs/mea/white-paper/iqvia-digital-health-system-maturity-in-africa.pdf

- Maternal and Child Health Inequities in Sub-Saharan Africa, accessed November 2, 2025, https://bioengineer.org/maternal-and-child-health-inequities-in-sub-saharan-africa/

- Sub-Saharan Africans Rate Their Health and Health Care Among the Lowest in the World, accessed November 2, 2025, https://spia.princeton.edu/news/sub-saharan-africans-rate-their-health-and-health-care-among-lowest-world

- Lack of Access to Maternal Healthcare in Sub-Saharan Africa – Ballard Brief – BYU, accessed November 2, 2025, https://ballardbrief.byu.edu/issue-briefs/lack-of-access-to-maternal-healthcare-in-sub-saharan-africa

- People In Sub-Saharan Africa Rate Their Health And Health Care Among Lowest In World – PMC – NIH, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC5674528/

- Understanding Health Inequality, Disparity and Inequity in Africa: A Rapid Review of Concepts, Root Causes, and Strategic Solutions – PubMed Central, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12039359/

- Transforming African Healthcare with AI: Paving the Way for Improved Health Outcomes, accessed November 2, 2025, https://www.jscimedcentral.com/jounal-article-info/Journal-of-Collaborative-Healthcare-and-Translational-Medicine/Transforming-African-Healthcare-with-AI:-Paving-the-Way-for-Improved-Health-Outcomes-11760

- Digital Health Interventions (DHIs) for Health Systems Strengthening in Sub-Saharan Africa: Insights from Ethiopia, Ghana, and Zimbabwe | medRxiv, accessed November 2, 2025, https://www.medrxiv.org/content/10.1101/2025.04.22.25326213v1.full-text

- A situational review of national Digital Health strategy implementation in sub-Saharan Africa, accessed November 2, 2025, https://td-sa.net/index.php/td/article/view/1476/2540

- New report reveals promising potential of digital health in Sub-Saharan Africa – Imperial College London, accessed November 2, 2025, https://www.imperial.ac.uk/news/245155/new-report-reveals-promising-potential-digital/

- Artificial intelligence in global health: An unfair future for health in …, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11823112/

- Blog | Yourway Transport, accessed November 2, 2025, https://www.yourway.com/blog/clinical-trials-in-africa-promoting-diversity-in-medical-research

- Racial and Ethnic Disparities in Access to Medical Advancements and Technologies – KFF, accessed November 2, 2025, https://www.kff.org/racial-equity-and-health-policy/racial-and-ethnic-disparities-in-access-to-medical-advancements-and-technologies/

- Tackling the lack of diversity in health research – British Journal of General Practice, accessed November 2, 2025, https://bjgp.org/content/72/722/444

- Addressing the Underrepresentation of Minority Data in Clinical Trials: A Call for Action, accessed November 2, 2025, https://oatmealhealth.com/addressing-the-underrepresentation-of-minority-data-in-clinical-trials-a-call-for-action/

- Challenges of Africa’s Representation in Clinical Trials, accessed November 2, 2025, https://xceneinnovate.com/africas-representation-in-clinical-trials/

- (PDF) Challenges of Implementing AI in Low-Resource Healthcare Settings – ResearchGate, accessed November 2, 2025, https://www.researchgate.net/publication/394275718_Challenges_of_Implementing_AI_in_Low-Resource_Healthcare_Settings

- Collaborative report unveils the transformative role of Artificial …, accessed November 2, 2025, https://scienceforafrica.foundation/media-center/collaborative-report-unveils-transformative-role-artificial-intelligence-and-data

- The Healthcare Crisis in Sub-Saharan Africa, accessed November 2, 2025, https://africanmissionhealthcare.org/the-healthcare-crisis-in-sub-saharan-africa/

- Systematic review: Decentralised health information systems implementation in sub-Saharan Africa | Ogundaini, accessed November 2, 2025, https://td-sa.net/index.php/td/article/view/1216/2148

- (PDF) Developing user-centered system design guidelines for …, accessed November 2, 2025, https://www.researchgate.net/publication/396620659_Developing_user-centered_system_design_guidelines_for_explainable_AI_a_systematic_literature_review

- Senate Proposal Aims to Establish AI ‘Override’ Button in Healthcare …, accessed November 2, 2025, https://www.meritalk.com/articles/senate-proposal-aims-to-establish-ai-override-button-in-healthcare/

- Explainable AI in Clinical Decision Support Systems: A Meta-Analysis of Methods, Applications, and Usability Challenges – PMC – PubMed Central, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC12427955/

- Survey of Explainable AI Techniques in Healthcare – MDPI, accessed November 2, 2025, https://www.mdpi.com/1424-8220/23/2/634

- The Promise of Explainable AI in Digital Health for Precision Medicine: A Systematic Review, accessed November 2, 2025, https://www.mdpi.com/2075-4426/14/3/277

- What is the focus of XAI in UI design? Prioritizing UI design principles for enhancing XAI user experience – arXiv, accessed November 2, 2025, https://arxiv.org/html/2402.13939v1

- (PDF) Community Participation in Health Informatics in Africa: An …, accessed November 2, 2025, https://www.researchgate.net/publication/220168997_Community_Participation_in_Health_Informatics_in_Africa_An_Experiment_in_Tripartite_Partnership_in_Ile-Ife_Nigeria

- A Practical Guide to Participatory Design Sessions for the Development of Information Visualizations: Tutorial, accessed November 2, 2025, https://jopm.jmir.org/2024/1/e64508

- A rapid review of digital approaches for the participatory development of health-related interventions – PMC – PubMed Central, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC11638186/

- The potential use of digital health technologies in the African context: a systematic review of evidence from Ethiopia – PMC – PubMed Central, accessed November 2, 2025, https://pmc.ncbi.nlm.nih.gov/articles/PMC8371011/

- Using Information and Communication Technologies to Engage Citizens in Health System Governance in Burkina Faso: Protocol for Action Research, accessed November 2, 2025, https://www.researchprotocols.org/2021/11/e28780/
